by Yuri Suzuki, Olivier Bau and Ivan Poupyrev at Disney Research.

Exhibited at Ars Electronica 2013, where it received an honorary mention.

1. Fields (Disciplines):

Sound, HCI

2. Technology

Digital audio processing, biology

3. Interaction

Ishin-Denshin proposes that sound and voice can be transmitted between people through touch. One person records a sound with a microphone and transmits it to another person by touching that person's ear. The sound can also be passed on from person to person.

4. Design

The installation consists of a microphone that can record sounds and transmit them through touch. The microphone only records sounds whose amplitude is higher than a set threshold. A computer creates a loop from the recording and sends it back to an amplification driver, which converts the recorded sound signal into a high-voltage, low-current, inaudible signal. The output of the amplification hardware is connected to the conductive metallic casing of the microphone via a very thin, almost invisible wire wrapped around the microphone's audio cable. When holding the microphone, the visitor comes into contact with the inaudible, high-voltage, low-power version of the recorded sound. This creates a modulated electrostatic field around the visitor's skin. When the visitor touches another person's ear, this modulated electrostatic field causes a very small vibration of the ear lobe. As a result, the finger and the ear together form a speaker that makes the signal audible to the person being touched. The inaudible signal can be transmitted from body to body through any sort of physical contact.
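The recording step described above (keep only sound above a set threshold, then loop it) can be sketched roughly as follows. This is only an illustration, not the installation's actual software: the function names `gate_recording` and `loop_recording`, the sample values, and the choice to keep everything between the first and last loud sample are all assumptions made for the example.

```python
from typing import List


def gate_recording(samples: List[float], threshold: float) -> List[float]:
    """Return the span from the first to the last sample whose absolute
    amplitude exceeds the threshold (hypothetical gating rule)."""
    loud = [i for i, s in enumerate(samples) if abs(s) > threshold]
    if not loud:
        return []  # nothing was loud enough to record
    return samples[loud[0]:loud[-1] + 1]


def loop_recording(clip: List[float], repeats: int) -> List[float]:
    """Repeat the recorded clip so it can be played back as a loop."""
    return clip * repeats


# Made-up sample values: quiet lead-in, two loud samples, quiet tail.
samples = [0.0, 0.01, 0.5, -0.6, 0.02, 0.0]
clip = gate_recording(samples, threshold=0.1)
looped = loop_recording(clip, repeats=2)
```

In the installation, the looped signal would then go to the amplification driver; that hardware stage is not modelled here.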

5. Value

This work converts digital audio into physical touch through a tricky but clever method that takes advantage of the characteristics of the human body. It encourages us to explore the human body's potential for interacting with digital information.

Reference: http://www.disneyresearch.com/project/ishin-den-shin/

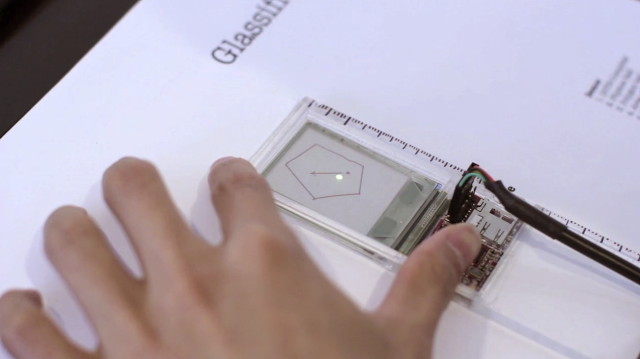

Project #2: Glassified

by Anirudh Sharma, Lirong Liu and Pattie Maes at MIT Media Lab.

UIST 2013 Demo

1. Fields (Disciplines):

TUI, AR, HCI

2. Technology

Algorithms, openFrameworks, physics simulator, Wacom digitizer

3. Interaction

Glassified is a modified ruler with a transparent display that recognises strokes drawn on paper and overlays virtual graphics on the physical strokes. It lets physical and digital objects work together, enabling users to complete more tasks with a regular ruler.

4. Design

The Glassified prototype consists of a transparent OLED display combined with a see-through ruler. A computer connected to the ruler performs gesture and stroke recognition and sends commands to the display over RS232. A Wacom digitizer and its API are used for tracking, and the system accounts for the difference between the pixel density of the tracking system and that of the display. On the computer, several openFrameworks applications handle the various tasks.
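To show why the difference in pixel density matters, here is a minimal sketch of mapping a digitizer coordinate onto display pixels. It is not the project's code: the function name `tablet_to_display`, the resolution figures, and the `origin_px` offset are hypothetical and chosen only for the example.

```python
from typing import Tuple


def tablet_to_display(
    x_units: float,
    y_units: float,
    tablet_lpi: float,
    display_ppi: float,
    origin_px: Tuple[float, float] = (0.0, 0.0),
) -> Tuple[int, int]:
    """Convert a digitizer coordinate to a display pixel coordinate.

    tablet_lpi:  digitizer resolution in lines per inch (assumed value)
    display_ppi: display resolution in pixels per inch (assumed value)
    origin_px:   position of the display's top-left corner, expressed in
                 display pixels relative to the digitizer origin (assumed)
    """
    # Go through physical inches so the two resolutions can differ.
    x_px = x_units / tablet_lpi * display_ppi - origin_px[0]
    y_px = y_units / tablet_lpi * display_ppi - origin_px[1]
    return (round(x_px), round(y_px))


# Made-up numbers: a 2540 lpi digitizer and a 100 ppi display.
point = tablet_to_display(2540, 5080, tablet_lpi=2540.0, display_ppi=100.0)
```

A stroke point tracked one inch across and two inches down would land at pixel (100, 200) on such a display, so graphics drawn there line up with the ink underneath.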

5. Value

Augmented Reality has been applied within Human-Computer Interaction since the 1980s. This project sheds light on AR in everyday tools. It inspires us to look more closely at the inherent attributes of such tools and to combine them appropriately with modern technology to serve people's daily work and life.

Reference: http://fluid.media.mit.edu/node/226
