Nike Fuel Band

http://www.nike.com/us/en_us/c/nikeplus-fuelband

http://insider.nike.com/us/launch/nike-fuelband/

The Nike FuelBand is an interesting product because it was developed to track anyone's everyday activity. It is geared toward the average person, offering a device that helps prioritize physical activity in everyday life. The FuelBand also lets users reach out to a network to gain motivation from others. The band can be connected to a smartphone over Bluetooth after downloading the free Nike Fuel app. The Fuel settings are customized to your age, weight, and height, and you set your own Fuel goal based on what you want to achieve. The band itself shows four readouts: Fuel, Calories, Steps, and Time.

This technology makes people aware of how much, or how little, activity they get on a daily basis, and helps build awareness of what they should and can achieve.

Technology:

2 Lithium Polymer Batteries (3.7 V)

20 red/green color LEDs

100 white LEDs

Stainless Steel

Magnesium

Thermoplastic Elastomers

Polypropylene

Bluetooth Connectivity

Pedometer

http://en.wikipedia.org/wiki/Nike%2B_FuelBand#Specifications

Monday, October 28, 2013

Final Project Proposal: Amy

Sensored Sports Ball for Coordination Development

Ideal Project:

http://www.ducksters.com/sports/football/throwing_a_football.php

http://www.thecompletepitcher.com/pitching_grips.htm

problem

A major problem when playing or learning a sport is the constant jamming of fingers: simple injuries that could be avoided by developing correct hand-eye coordination.

solution

This idea could apply to any sports ball, whether football, volleyball, baseball, etc. The ball would be lined with an infrared sensor layer that creates a virtual imprint of an athlete's hand placement on the ball. The information would be sent wirelessly to a computer so that athletes can easily preview their "imprint". The sensor layer would also include a force sensor and a calibrator to calculate the force with which the ball hits each finger, or with which each finger hits the ball. This information would be sent to the computer and processed by a program that outputs directions to help athletes improve their ball control. Programs will need to be developed with standards for each sport. For the program to be successful, I would need to gather enough information to create a control against which all variable data can be compared. I would also want the ball to sense wind patterns and temperature, and use these variables to help improve an athlete's adjustment to their surroundings.

http://www.imaging1.com/thermal/thermoscope-FLIR.html

application

The output would help athletes reduce their sources of error in their given sport. It would also reduce injuries and give personalized feedback when a coach may be focusing on another play. This is aimed at someone who wants to improve their skill set and learn the right way to play a sport, rather than someone who is playing for fun.

Reality:

I will create a volleyball that records data about what is happening to it. I will track three actions to make sense of the data I receive: set, spike, and serve. I will need to learn ZigBee, and I will need to teach myself to pipe the data I receive from the Arduino into Processing to visualize it.
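As a rough illustration of the data pipeline described above, the sketch below parses one line of sensor data as it might arrive over a serial link. The proposal names Arduino and Processing; Python is used here only for illustration, and the comma-separated line format (`millis,ax,ay,az,pressure`) and the `Sample` record are assumptions, not part of the proposal.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    """One reading from the ball (field layout is an assumed format)."""
    millis: int      # time stamp from the microcontroller
    ax: float        # accelerometer x axis
    ay: float        # accelerometer y axis
    az: float        # accelerometer z axis
    pressure: int    # raw pressure sensor reading


def parse_line(line: str) -> Optional[Sample]:
    """Parse 'millis,ax,ay,az,pressure'; return None for malformed lines."""
    parts = line.strip().split(",")
    if len(parts) != 5:
        return None
    try:
        return Sample(int(parts[0]), float(parts[1]), float(parts[2]),
                      float(parts[3]), int(parts[4]))
    except ValueError:
        return None


def accel_magnitude(sample: Sample) -> float:
    """Overall acceleration, independent of how the ball is oriented."""
    return math.sqrt(sample.ax ** 2 + sample.ay ** 2 + sample.az ** 2)
```

Dropping malformed lines instead of raising keeps a visualization loop running when a wireless packet arrives truncated.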

Things I will need:

- Volleyball

- ZigBee

- Pressure Sensors

- Accelerometer

- MPR121 Capacitive Touch Sensor

Areas to Research:

- sports medicine

- interaction design

- athlete sensor data

- tracking athletic movement

Saturday, October 26, 2013

Looking Out: Andre

The V Motion Project from Assembly on Vimeo.

This interactive performance piece, The V Motion Project, was a collaboration between people from a variety of fields at the request of Frucor (makers of V Energy drink) and their ad agency BBDO. The end result was a stunning projection-mapped live musical sequencer performed outdoors on the side of a building. More information about the tech can be found here: http://www.custom-logic.com/blog/v-motion-project-the-instrument/

Thursday, October 24, 2013

LookingOut 8: Liang

Project #1 Bubble Cellar

BY Joelle Aeschlimann, Pauline Saglio, and Mathieu Rivier (2013)

This is an interactive installation composed of a bubble blower, a projector, and wind sensors. It invites users to come near and blow into the blower to create bubbles, just as we do in everyday life. Instead of creating real bubbles, the installation projects virtual soap bubbles on the wall, which rise with lightness and fragility, leaving us anticipating the moment they burst. Because the bubbles are projected shadows, they leave much more room for imagination and wonder: when a bubble bursts, it turns into an animation.

Project #2 MoleBot

BY Design Media Laboratory (2012)

It is a mole robot built under a transformable surface that lets people enjoy playful interactions with everyday objects and props, and experience what it feels like to have a mole living under the table. It can perform many tasks, such as picking fruit and leading objects, and users can interact with it via gestures.

Monday, October 21, 2013

Looking Out: Gaby

Chopsticking

Chopsticking is a two player board game designed to test and improve one's chopsticking skills. While the sushi bowl spins, each player has 30 seconds to pick up as much sushi as possible while accurately holding the chopsticks.

You can find out more about the project at these links:

- Sharpening Chopstick Skills with “Chopsticking” (Maker Faire NYC 2013)

- Chopsticking (Project Page)

Campfire - Final Project Proposal - Austin, Andre

Themes

- Campfires are places where people gather

- The outside world melts away

- Encourages engagement

- Interaction

- Engage with materials/food

- Engage with one another

- Observing

- Random pops and light patterns

- We want to create a modern day campfire

- Light

- Sound

- Interaction from 360-degrees

- Engage with other people over the top

- Suspense

Concept

A cube or pyramid with light-up buttons. People gather around the object and press buttons to see what happens. Different light and sound patterns occur depending on the order and combination of button presses. People will naturally be drawn in and want to experiment. Without stimuli, the object reverts to a stasis mode: a slow pulse of light and sound.
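The button behavior in the concept (patterns that depend on the order of presses, plus a stasis mode when nobody touches the object) could be sketched as a small state tracker. This is only an illustration in Python; the 10-second idle timeout, the three-press history, and the `Campfire` class name are assumptions, and the actual build would run on the Arduino.

```python
class Campfire:
    """Tracks recent button presses and decides between stasis and active."""

    IDLE_TIMEOUT = 10.0  # seconds without a press before stasis (assumed)

    def __init__(self, history: int = 3):
        self.history = history   # how many recent presses define a pattern
        self.recent = []         # most recent button ids, oldest first
        self.last_press = None   # time of the last press, in seconds

    def press(self, button: int, now: float) -> None:
        """Record a press; only the last `history` presses are kept."""
        self.recent = (self.recent + [button])[-self.history:]
        self.last_press = now

    def mode(self, now: float) -> str:
        """Return 'stasis' when idle for too long, otherwise 'active'."""
        if self.last_press is None or now - self.last_press >= self.IDLE_TIMEOUT:
            return "stasis"
        return "active"

    def pattern_key(self) -> tuple:
        """Ordered key, so pressing 1 then 2 differs from 2 then 1."""
        return tuple(self.recent)
```

Using an ordered tuple as the key means a lookup table of light and sound patterns can respond to both the order and the combination of presses.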

Background

- Monome

- LED Light Cubes

Tech

- Arduino

- Max/MSP

- Chronome

Materials

Prototype Specific

- 4x4 PCB LED Boards (x2) - $20

- 4x4 LED Button Boards (x2) - $20

Final Project

- 7x 74HC595 - $5-$10

- 7x 74HC165 - $5-$10

- Possibly using Adafruit 24-channel LED Drivers - $15 each

- RGB LEDs x80 or x45 - $10

- Arduino

- Acrylic Sheet - $50

- Buttons (either 45 or 80 depending on prototype grid size) - $5-$10

Friday, October 18, 2013

Final Project Proposal: Liang

Project: Inkative

INTRODUCTION

Project Intent

What does the interaction between humans and living creatures look like? I hope Inkative can be a platform that records and depicts this dynamic interactive process in a tangible way. It converts small creatures' (such as goldfish, bees, and flies) reactions to surrounding stimuli into something that can be recognised and described. To some extent, it will provide a novel way for people to observe and learn how to communicate with these little lives.

PROJECT BACKGROUND

The original idea for this project came from an episode of "Sherlock Holmes" (here). Out of curiosity about how people's behaviour or environmental changes affect small creatures' behaviour, I started to read and review the literature and the works of other researchers and artists. Some of them are listed here:

David Bowen's fly works

fly revolver (2013) [link]: A revolver is controlled by the activities of a collection of houseflies, which are monitored via video in an acrylic sphere. The revolver aims in real time according to the flies' relative positions on the target. If a fly is detected in the center of the target, the trigger of the revolver is pulled.

fly drawing device (2011) [link]: Based on the subtle movements of several houseflies, an artificial device produces a drawing on a wall. The drawing is composed of random and discrete strokes. There is a big sphere with a chamber on the top. When flies enter the chamber, the device is triggered to begin drawing.

It is an innovative light artwork and also a community of friendly, intelligent lights that influence one another. The fireflies are programmed to respond to light from their neighbours. In this community, popular fireflies become highly influential, whilst isolated ones must work harder to reach their friends. People can speak the same language and influence the interaction between community members by shining lights on these fireflies.

In conclusion, these works fall into two categories: bio controllers and interactive installations. The former focuses only on what kinds of output animals' behaviour can produce, and lacks the ability to observe how the environment affects that behaviour. It is therefore more like a one-way system that addresses variations in output but ignores interactive input from ambient stimuli. By contrast, the latter category focuses on how to simulate creatures with modern technology and fabrication methods. It presents an interesting way to explore how animals' behaviour and habits affect people's social activities. In most cases, installation art is the best form for this kind of system, since it invites the audience to participate. I hope to create a device based on the ideas of the above works that enables users to interact with animals, records the dynamic interactive process, produces something that can be perceived and studied by humans, and ultimately helps people better understand communication with the other living beings on the planet.

INITIAL DESIGN & IMPLEMENTATION

Prototype

The project currently aims to observe the interactions between people and small animals, so the application here is about goldfish. The system looks like a stage on which the fish tank is placed. Two cameras track the position of the goldfish in the tank and deliver the data to the computer. Beneath the stage is a chamber that resembles a CNC machine. Inside it, a brush moves in 3D space to draw on ink paper. Unlike a typical CNC machine, it has a circular structure. A stepper motor at the center spins the upper circular panel. A steel rod acts as a bracket supporting a second stepper motor that drives the brush. With these two motors and this structure, the brush can theoretically reach any point on a circular plane. The brush is mounted on a servo-driven device so that it can be lifted up and down to indicate the animal's vertical position. The drawing takes the form of traditional Chinese painting because it can reflect the animal's 3D position through the pressure of the brush and the amount of water it carries. In addition, to address the interaction, proximity sensors detect whether people are standing around the platform.

This is an initial sketch for this prototype:

The diagram of the system is:

Materials

Required: masonite wood, steel rod, ink paper, Chinese calligraphy brush, acrylic board, felt

Maybe: PLA

Hardware

Required: Webcam, proximity sensor, stepper motor, servo, Arduino, computer, resistor, LED, jumper wire

Maybe: Raspberry Pi

Software

Required: Arduino, openFrameworks, OpenCV

Fabrication Processes

Laser cut all plates and parts that the stage needs, including the brackets, the base, the circular plane, gears, and any enclosures. For parts that laser cutting cannot produce, 3D printing may help.

Budget

| Name | Price | Number |
|---|---|---|
| Webcam | $5.09 | 2 |
| Masonite wood | $39.95 (12'' x 12'') | 1 |
| Proximity sensor | $13.95 | 4+ |
| Arduino Uno | $29.95 | 1 |
| Brush | $4.38 | 1 |
| Ink paper | $2.00 | n |
| Stepper motor | $16.95 | 2 |
| Servo | $8.95 | 1 |
| Acrylic | $15.00 | 1 |
| Felt | $14.30 | 1 |

10/14 - 10/20: schematic design, prototype scope, sketches, technology and material exploration, diagrams.

10/21 - 10/27: purchase components, test OpenCV in openFrameworks, finish object tracking and location in a 3D environment.

10/28 - 11/03: design the physical structure and fabricate all parts, mount the circuit, test the stepper motors.

11/04 - 11/10: mount all parts and test the mechanism, test the servo.

11/11 - 11/17: complete the circuit logic, including the combination of stepper motors and servo; merge CV data and circuit program, attempt 1.

11/18 - 11/24: merge CV data and circuit program, attempt 2, and test the entire system.

11/25 - 12/01: refine design, development, and mechanism; test and prepare for the final presentation (shoot video demo and final documentation).

Documentation runs through the entire design and development process.

Challenges

1. Track fish's position with openCV and openFrameworks.

2. Data processing. Convert location (x, y, z) into the angles applied to the stepper motors and the servo.

3. CNC-like mechanism design and fabrication.
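For the second challenge, one possible approach is to treat the circular drawing plane as polar coordinates: the panel stepper sets an angle, the second stepper sets a radial distance along the rod, and the servo follows depth. The sketch below is an illustration in Python, not the project's actual code. It assumes the fish position is measured from the center of the tank, a 1.8-degree stepper (200 steps per revolution), and a 0 to 90 degree servo range.

```python
import math


def position_to_targets(x, y, z, tank_radius, tank_depth,
                        servo_min=0.0, servo_max=90.0):
    """Map a tracked (x, y, z) position to (panel angle, radius, servo angle).

    x, y are measured from the tank center; z is depth from 0 to tank_depth.
    """
    # Rotation of the circular panel, normalized to 0..360 degrees.
    theta = math.degrees(math.atan2(y, x)) % 360.0
    # Distance of the brush from the center, limited to the drawing plane.
    radius = min(math.hypot(x, y), tank_radius)
    # Vertical position scaled linearly into the servo's range.
    depth_fraction = min(max(z / tank_depth, 0.0), 1.0)
    servo = servo_min + depth_fraction * (servo_max - servo_min)
    return theta, radius, servo


def angle_to_steps(angle_deg, steps_per_rev=200):
    """Convert a panel angle to a whole number of stepper steps."""
    return round(angle_deg / 360.0 * steps_per_rev)
```

For example, a fish directly "north" of the center at half depth maps to a quarter turn of the panel and the servo's midpoint. Clamping the radius and depth keeps noisy camera readings from driving the brush outside the mechanism's range.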

Inkative is a platform that records the dynamic interaction between people and fish and converts it into traditional Chinese painting. People can study the fish's reaction to ambient stimuli from these paintings. In the future, it could also be applied to other animals, such as bees and flies. It has great potential value in helping people understand how animals' behaviour changes as the environment changes.

Final Project Proposal: Wanfang Diao

Option One

Music cubes

Description: The Music Cubes are a set of toys designed for children to explore sound, notes, and rhythm. Each cube is mapped to a certain pitch, and its position is mapped to rhythm. Children can create a melody by placing the cubes in a circle.
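The mapping described here (cube to pitch, position to rhythm) can be pictured as a short looping step sequence. The following Python sketch is only an illustration; the note names, the slot-based representation of the circle, and the `build_loop` helper are assumptions rather than part of the proposal.

```python
# Standard equal-temperament frequencies in Hz for a few notes.
NOTE_FREQ = {
    "C4": 261.63,
    "D4": 293.66,
    "E4": 329.63,
    "G4": 392.00,
    "A4": 440.00,
}


def build_loop(slots):
    """Turn the circle of slots into (step index, frequency) events.

    `slots` lists the circle's positions in playing order; each entry is
    the note name of the cube placed there, or None for an empty position.
    """
    return [(step, NOTE_FREQ[note])
            for step, note in enumerate(slots)
            if note is not None]
```

With `build_loop(["C4", None, "E4", None])`, the first and third steps sound and the empty positions become rests, so where a child places a cube shapes the rhythm.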

Goals:

1. Simple & direct

2. Educational

3. Playful

Timeline:

Week 1 & 2: Build circuits to make sounds first, and then try the recognition part

Week 3: Focus on the size/appearance

Laser cut the box and the plate

Continually improve the circuits: add the recognition part (change pitch part record)

Week 4: Counterpoint / other development / make more cubes

Week 5: Put them together and let children try it.

References are in slide below.

Final Project Proposal: Gaby

Goal

Cakes are delightful to make and delicious to consume. They can be used to congratulate, celebrate, and console. However, making a good cake can also take more time than we have. This project proposes an experience where a person can interactively design his or her own cake and pick it up within minutes.

Concept

This project will scope itself to building an interactive tool or buddy that will guide a user in a part of the cake design process.

Similar Work

Materials

These will be determined in the first week of ideating and paper prototyping according to the kind of aid we want to provide to the user.

Timeline

1 - ideating and early paper prototypes

2 - material list and first rough but functional prototype

3 - iterating prototype

4 - checkpoint: functional prototype

5 - out in the world, all core functionality

6 - out in the world, polish

7 - out in the world, polish

8 - final documentation

Looking Out 7: Chin Wei

The Popinator

by Thinkmodo

A device that allows a person to eat popcorn without having to use hands. It is triggered by the word "pop", uses that sound to locate the person's mouth and air pressure to project the popcorn. It was built for a viral video campaign for Popcorn Indiana rather than as a standalone product.

Final Project Proposal: Ayo

Viu-It's Alive!

Project Vision

The goal of this project is to facilitate a playable experience using audio, video animation and the human body as mediums. To this end, participants will engage with the project by walking, jumping and running around an interactive room to explore what secrets it offers and the subtle ways it comes alive.

Similar Previous Work

The inspiration for this project is the idea of giving a form or voice to the mundane and largely invisible. As such, here is a sampling of projects I believe accomplished this idea very well:

Electricity Comes from Other Planets

Creators: Fred Deakin, Nat Hunter, Marek Bereza, James Bulley for the Joue Le Jeu (Play Along) exhibition.

Propinquity

Creators: Lynn Hughes, Bart Simon, Jane Tingley, Anouk Wipprecht, Marius Kintel, Severin Smith

Mogees - Play the world

Creator: Bruno Zamborlin at Embodied AudioVisual Interaction team (EAVI), Goldsmiths.

Implementation Details

Anticipated Hardware

- Microsoft Kinect or available Depth camera

- Short-throw Projector

- Low-cost micro-controller

- Wireless RF modules: XBee or Bluetooth

- 3-axis accelerometer

- Laptop computer

- RGB LEDs

My approach to the mechanics of the gameplay will be to use simple actions and behaviours that can scale in order to produce emergent complex behavior. This would produce the illusion that the environment is alive and responding to participant input.

Ninja: The goal is to avoid scan lines (a radar pattern) that are projected toward participants. It encourages walking, running, and jumping to evade the scan lines as they approach.

Single-Player Gameplay: The computer projects the scan lines, which the lone participant tries to evade.

Multi-Player Gameplay: Participants generate these scan lines themselves and try to tag other participants, who are free to evade or to generate their own scan lines to cancel the approaching ones.

Human Synth: The goal is to create music by moving around the interactive room. This is achieved by mapping the 2D space of the room to frequency on one axis, and pitch on the other.

Single-Player Gameplay: The lone participant creates music by walking, running, and jumping to different locations within the room.

Multi-Player Gameplay: Each new participant adds an additional instrument and effect to an unknown location in the room. Music generation is thus not only crowd-sourced, but also involves moving around to various locations to discover what sound is produced.

Project Scope & Rough Timeline

I will try to strictly adhere to the suggested timeline for final projects. Thus, the first two weeks will be devoted to brainstorming, and research. Development will begin in the third week leading into debugging and testing from week 5. The last week (week 8) will be devoted solely to appropriate documentation (video and otherwise).

Deliverables

- LEDs enclosed within laser-cut cubes that can be activated remotely.

- Battle-tested gameplay mechanics

- Fully functioning software to enable Kinect to sense participants and project appropriate game information.

- Schematics, Documentation

- System Demo

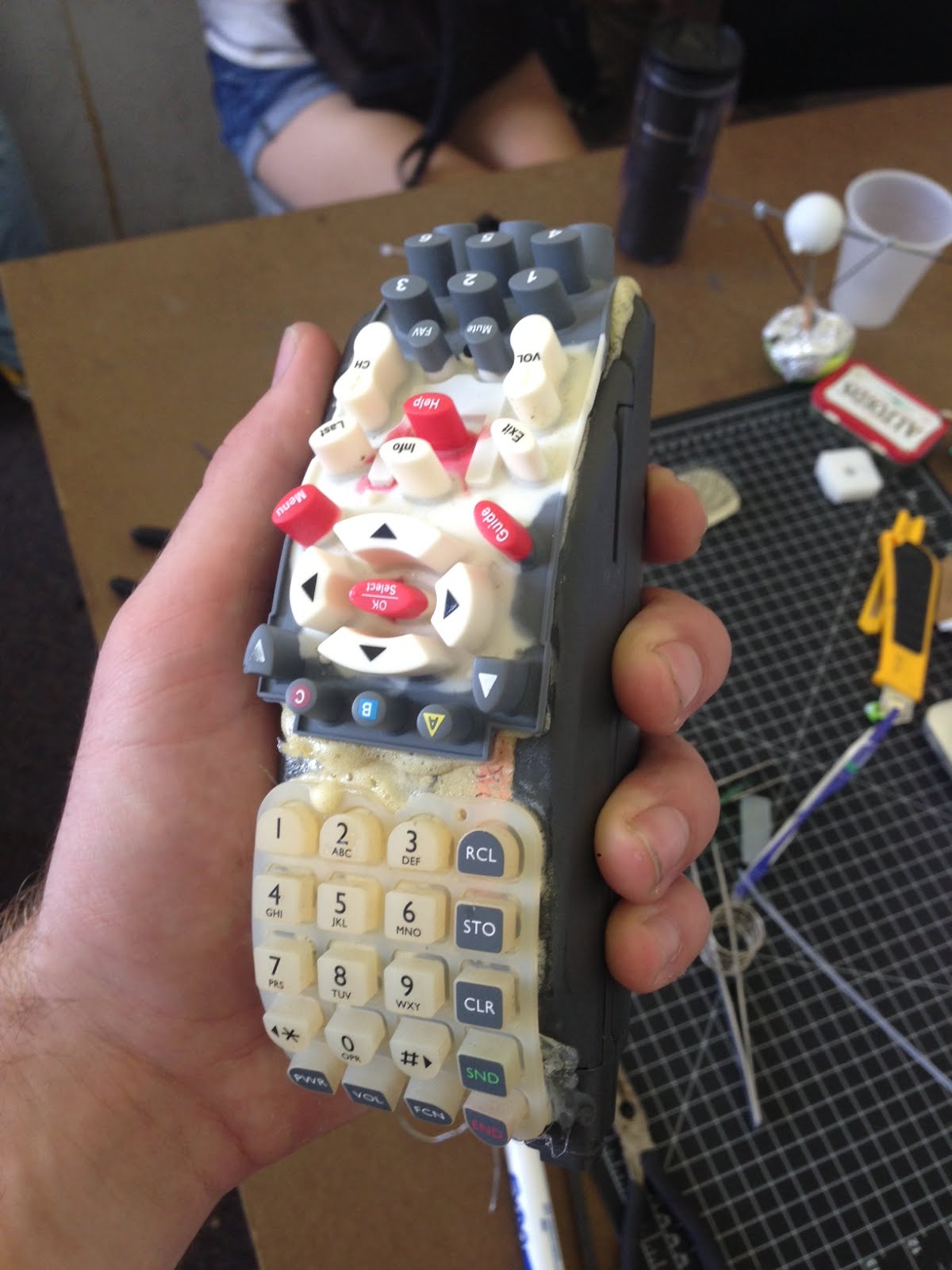

Final project proposal: Mauricio

Haptic feedback and actuation for power tool control

Objective

The overarching purpose of my project is to improve haptic feedback mechanisms in order to provide an intuitive and precise way of controlling large-scale power tools with hand gestures. The inspiration comes from the domain of sculpting, taking into consideration the wonderful precision sculptors reach by modeling materials with their own hands or with hand tools. This kind of precision is not possible in a “direct way” (meaning modeling the piece in real time, as in clay modeling) when dealing with large-scale pieces or materials that are difficult to work with. On the other hand, mass production tools such as industrial robotic arms with several degrees of freedom might be able to achieve these tasks, yet it is my opinion that pieces produced in this way lack a “soul” component. The purpose is then to give the artist a very “expressive” way to control a robotic arm, in order to leverage both the power and precision of the machine and the uniqueness and “happy accidents” of a human-produced piece. The thesis is that this “very expressive way” may be achieved by gestural control of the machine, mimicking as much as possible the original contact between hand and materials. Examples of gestural control of robotic arms are readily available, yet they always (in my opinion) seem to address extremely simple tasks such as grappling objects, moving them from point A to point B, and similar. The reason I think most systems are not capable of achieving greater precision is that gestures are performed in free air, neglecting the tactile feedback that direct contact gives us, and for which millions of years of evolution have prepared our sense of touch and our muscles. My intent is to test a method of adding haptic feedback to a gestural control mechanism for a robotic arm, and to perform user studies to determine whether or not it enhances the interaction.

Scope

It has been discussed with the faculty leading this course that the project will be broken down into pieces, because of its complexity and also to better fit the academic requirements of different courses. Hence in “Making Things Interact” the mission will be to create a simple feedback and control mechanism, not for a full-fledged industrial robot, but for a much simpler tool, for example a drill press or similar. The system must allow for:

- Control of some aspect of the machine

- Haptic feedback

Implementation plan

I will:

- Either embed a glove with an Inertial Measurement Unit (IMU) for position or acceleration/rotation tracking and with vibrators for feedback, or get something equivalent on the market that I can hack.

- Create control over a few degrees of freedom with the glove for a simple power tool, e.g. control the height of a drill press by actuating its lever. The actuation might be as simple as a motor and a piece that attaches its axis to the lever of the drill.

- Create a control loop so that the glove sends data to the actuator and in turn measures data that allows it to show basic haptic feedback. For example, the current of the motor actuating the lever may be measured to determine more or less torque, which is related to whether or not the head of the drill press is touching the material being drilled. A certain current level will trigger the vibrating response of the glove, completing the feedback loop.
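The feedback half of the control loop in the last step could be sketched as a mapping from measured motor current to vibration strength. This Python sketch is only an illustration under stated assumptions: the threshold of 0.8 A, the 2.0 A ceiling, and the proportional scaling above the threshold are hypothetical choices, since the plan only says that a certain current level triggers the vibration.

```python
def vibration_duty(current_a, contact_a=0.8, max_a=2.0):
    """Return a vibration duty cycle between 0.0 and 1.0.

    current_a: measured motor current in amperes.
    contact_a: current above which the drill is assumed to be touching
               the material (hypothetical value).
    max_a:     current at which vibration saturates (hypothetical value).
    """
    if current_a < contact_a:
        return 0.0  # no contact detected, glove stays still
    fraction = (current_a - contact_a) / (max_a - contact_a)
    return min(fraction, 1.0)  # cap at full strength
```

Scaling the vibration with current, rather than switching it on and off, is one way to let the user feel how hard the tool is pressing; a plain threshold would also satisfy the plan as written.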

Notes

A way to embed a high end IMU and vibrating feedback into the glove in a convenient form factor, timely and tidy manner may be by attaching a smartphone to it, hence this avenue will be explored.

Since the example input is acceleration/rotation of the hand and the intended feedback is vibration, the feedback itself might make the input to the system really noisy. This may be:

- Exactly what needs to happen (think of when you are going really hard against a material with a power tool: it may vibrate in a way that makes it difficult to retain precision)

- Not desirable (based on user assessment). For this project I think this could be addressed by having 2 gloves, one for vibration and the other for input, or filtering the input in a way that the vibration is omitted.

Deliverables

- Glove with vibrating feedback and sensors (does not need to be IMU based but that is the plan for now) for controlling an actuator for a power tool.

- Working code, schematics, documentation, etc.

- Demo

Final Project Proposal 2.0: Ding

EnSing

Project Intent:

The purpose of this project is to build a portable radio/music player box for the home that can be placed on a desk or attached to a wall, sense its surrounding environment, and recommend specific songs to users. The idea originates from the view that the environment can sometimes be a good context and can generate a certain "mood" to translate into music.

Reference Project:

Skube by Andrew Nip, Andrew Spitz, Ruben van der Vleuten, Martin Malthe Borch

Frijilets by Inbal Lieblich, Umesh Janardhanan and Andrew Stock

- Omnion by Ana Catharina Marques, Kostantinos Frantzis, Markus Schmeiduch, Momo Miyazaki

Jing.FM by Kaiwen Shi

Music can also have emotion. People find specific songs according to their description.

Sadly By Your Side

Brief description:

This device consists of two parts: the body and a set of different caps (gadgets). The body is the core that gets sensor data, processes information, and transmits and receives music. The caps are sensitive to different types of context signal. Using a different cap captures the corresponding environmental information. There are four types of caps.

(1) Control caps: get the signal from an accelerometer/tilt sensor to skip to the next song and adjust the volume.

(2) Context data caps: get some low level sensor data such as smell, color, temperature, speed and so on.

(3) Human activity caps: capture people's facial expressions when they come by, or listen to people humming a certain melody in order to find similar songs.

(4) Filter caps: this type of cap adds effects to the song, such as changing the pitch or timbre, or even adding some funny sounds while a song is playing.

Moreover, this device has two modes: search mode and generate mode. In search mode, input data is transformed into specific tags according to its type and value; these tags are then used to describe a series of songs to users. In generate mode, people use several different caps to change the input data in order to create a piece of generative music. For example, with a light cap sensing the surrounding light and a video cap recording people's emotions, changes in the input data will generate different, non-repeating sounds.
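The search mode's first step, turning a sensor reading into a tag based on its type and value, could look something like the Python sketch below. It is an illustration only: the sensor names, the thresholds, and the tag vocabulary are all hypothetical, and the real tags would have to match whatever the chosen music service accepts.

```python
# For each sensor type: (upper bound, tag) pairs in ascending order.
# All thresholds and tag names are assumed for illustration.
TAG_RULES = {
    "temperature": [(15.0, "cold"), (25.0, "mild"), (float("inf"), "warm")],
    "light": [(300.0, "dark"), (700.0, "dim"), (float("inf"), "bright")],
}


def reading_to_tag(kind, value):
    """Return the tag of the first range that `value` falls below."""
    for upper, tag in TAG_RULES[kind]:
        if value < upper:
            return tag
    return None  # unreachable while every rule list ends with infinity


def readings_to_tags(readings):
    """Convert {sensor type: value} into a list of tags, in input order."""
    return [reading_to_tag(kind, value) for kind, value in readings.items()]
```

Keeping the rules in a table means a new cap only needs a new entry in `TAG_RULES`, which fits the modular cap design.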

Sketch:

Diagram:

Hardware:

Plan I: Sensors + Arduino/Teensy + Raspberry Pi + Wi-Fi adapter

Plan II: Sensors + Arduino/Teensy + XBee/Wixel + sensors (transmitting circuit) + PC/mobile phone

Software (Under investigation):

Plan I: Python + Arduino IDE + MAX + JingFM/Last.fm API + Ecoprint API

Plan II: Processing + Arduino IDE + JingFM/Last.fm API + Ecoprint API

Technology keywords:

Laser cutter + tangible control + context sensing + modular player

Goal:

Finish building the main body and at least three caps (controller, environment, listening) under the two different modes. Try to develop more scenarios and

Thursday, October 17, 2013

Final Project Proposal: Yang&Meng

Heart Pulse

A sculpture that senses, expresses, and transmits heart pulse

Project intent:

Our idea is to express people's emotions using their heart pulse.

Hearts are powerful and personal. They are also symbols of emotion in many different cultures. Moreover, unlike other signs such as fingerprints or eye color, the heartbeat is dynamic and changeable, which gives us plenty of room to imagine interactions with it.

"Maybe I cannot see you, but I can feel your heart."

A sculpture or a wall that can sense, express, and transmit heart pulse to make people feel connected with others.

Inspiration:

Pulse Drip:

Pulse Spiral:

www.lozano-hemmer.com/pulse_spiral.php

Design:

1. Interactive wall in an urban area

2. Interactive sculpture

3. Heartbeat game

We plan to use sensors to visualize human power. People may use their heart power to compete (PK) with each other. The interaction surface will show their power through the brightness of the LEDs.

So it works as a game. Two people stand on different sides of the instrument. When they put their hands on it, their heartbeats produce different patterns and compete with each other.

Value:

- Sharing and Communication: I cannot see the person behind the wall but I know there is a person sharing his heart pulse

- Feeling warm: Create a feeling of being connected with other people (for people living in urban areas)

- Interaction : Study how visualized heart pulse can affect people's emotion or be used in communication

Technology solution:

- LED

- Projector

- Foamed Plastics

- Pulse sensor

- IR sensor

Technology feasibility:

http://www.youtube.com/watch?v=6iWq-hRIkSc

http://www.youtube.com/watch?v=BAp1snPchT4
