EnSing grew out of the idea of a context-sensitive, PC-independent music player for the home. It consists of two parts: a central hub, the BODY, and detachable magnetic blocks, the CAPs. Each CAP either senses a parameter of the context or the user, or is assigned one, for example light intensity or the user's mood. A CAP works only while it is attached to the BODY. When the user taps the head of the BODY, or after a song finishes playing, the BODY updates the CAP values, either directly or implicitly.
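The BODY/CAP relationship described above can be modeled roughly as follows. This is a minimal sketch, not EnSing's actual implementation: the `Cap` and `Body` classes, the `on_trigger` method, and the parameter names `light_intensity` and `mood` are hypothetical. It assumes a sensing CAP pulls a fresh reading from a sensor callable, while a designated CAP keeps a value the user has set, and that only attached CAPs are refreshed when the BODY is tapped or a song ends.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Cap:
    """Hypothetical model of a detachable block bound to one context or user parameter."""
    parameter: str                                     # e.g. "light_intensity" or "mood" (assumed names)
    read_sensor: Optional[Callable[[], float]] = None  # set only for sensing CAPs
    value: Optional[float] = None                      # last known value

    def refresh(self) -> None:
        # Sensing CAPs pull a new reading; designated CAPs keep the value the user set.
        if self.read_sensor is not None:
            self.value = self.read_sensor()


@dataclass
class Body:
    """Hypothetical central hub: only CAPs attached to it contribute to the context."""
    caps: Dict[str, Cap] = field(default_factory=dict)

    def attach(self, cap: Cap) -> None:
        self.caps[cap.parameter] = cap

    def detach(self, parameter: str) -> None:
        # Detaching an unknown parameter is a no-op.
        self.caps.pop(parameter, None)

    def on_trigger(self) -> Dict[str, Optional[float]]:
        """Called on a head tap or when a song ends; refreshes every attached CAP."""
        for cap in self.caps.values():
            cap.refresh()
        return {name: cap.value for name, cap in self.caps.items()}
```

For example, attaching a light-sensing CAP (`read_sensor=lambda: 0.7`) and a mood CAP set to `0.2`, then calling `body.on_trigger()`, yields both values; after `body.detach("mood")` only the light reading remains, mirroring the rule that a CAP must be attached to take effect.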

Key Technologies:

1. Searching, requesting and playing web music via openFrameworks

2. Music playback and control on Raspberry Pi

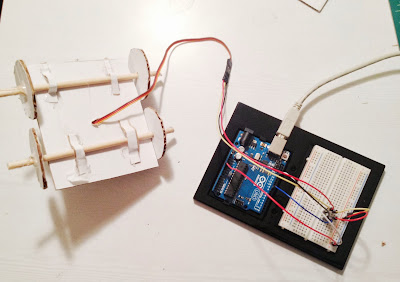

3. Sensor control with Arduino

4. Communication between Raspberry Pi and Arduino

5. Detachable physical structure design

6. Laser cutting and 3D printing
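The Raspberry Pi–Arduino link in item 4 is often a serial connection carrying short text lines. The sketch below shows one way the Pi side might parse such lines; the `key=value;key=value` format, the keys `light`, `tap` and `mood`, and the functions `parse_sensor_line` and `merge_readings` are all assumptions for illustration, not EnSing's documented protocol. On the Arduino side, a line in this format could be produced with `Serial.print("light="); Serial.println(analogRead(A0));`.

```python
from typing import Dict, Iterable


def parse_sensor_line(line: bytes) -> Dict[str, int]:
    """Parse one line such as b"light=512;tap=1\\r\\n" into {"light": 512, "tap": 1}.

    The "key=value;key=value" format is an assumed convention. Malformed fields
    are skipped rather than raising, since serial lines can arrive truncated.
    """
    readings: Dict[str, int] = {}
    text = line.decode("ascii", errors="ignore").strip()  # drops the trailing \r\n from println
    for part in text.split(";"):
        key, sep, raw = part.partition("=")
        if not sep or not key.strip():
            continue  # no "=" or empty key: ignore this field
        try:
            readings[key.strip()] = int(raw)
        except ValueError:
            continue  # empty or non-numeric value: ignore this field
    return readings


def merge_readings(lines: Iterable[bytes]) -> Dict[str, int]:
    """Fold a stream of lines into the latest value seen for each key."""
    latest: Dict[str, int] = {}
    for line in lines:
        latest.update(parse_sensor_line(line))
    return latest
```

On the Pi, the input lines could come from pyserial's `serial.Serial(port, baudrate).readline()`; the port name (for example `/dev/ttyACM0`) and baud rate depend on the actual setup. Keeping parsing separate from I/O lets the protocol be tested without hardware attached.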